I've been working on a demonstration web site of a meningitis case reporting system in Africa. The current demo uses OpenLayers to show a map of the spatial distribution of cases, and a couple of PNG plots generated from R to show the cases in time for a particular area. One plot showed the cases for the past year, and one was a plot of the previous four weeks with our prediction of the next four weeks cases (plus 95% confidence interval).

But I thought these were a bit static. So I thought I'd add a bit more functionality. Options? Well, I could use my imagemap package for R to create hotspot areas on the plot so users can hover over points and get information, or click on points to zoom to epidemic predictions and so on. But I decided to try a Javascript plotting library instead. There's a few out there, and I settled on using Flot - although jqPlot looks pretty capable too.

Flot lets you plot lines, points and bars, and you can make filled areas by constructing a data series that goes along the top and back along the bottom, completing a polygon. You can shade and style the points and lines, and you get a legend. If your X-axis is time, you can feed it milliseconds and get dates. Here's what I've got to start with:

The buttons at the bottom control the time period. By clicking on 'Prediction', the user can zoom in to see the most recent few weeks, plus our two- and four-week predictions with 50% and 95% confidence interval. Flot also lets you add hover and click events to points:

Here we're showing that our 2-week ahead prediction has a 92% chance of exceeding our 10-case threshold.

Flot also allows zooming in on plots. See that earlier peak around July? We can select that area:

and Flot can show that time period in detail:

All very nice. I've note tested this on Internet Explorer yet, but jQuery and Flot should hide browser-specifics from the author so it should work. This all worked out pretty smoothly, except for a few problems. The biggest one is that if the user resizes the browser window, the plot doesn't resize properly. Not a problem if your DIV element has a fixed width, but I had width: 80% in my CSS. The fix was to redo the plot on a resize event of the window. In jQuery, that's:

$(window).bind("resize",function(eventObject){

plot = $.plot(plotDiv, d, options );

});

If anyone wants some more code, or the full example, then just ask!

Barry

Sunday, 27 December 2009

Thursday, 17 December 2009

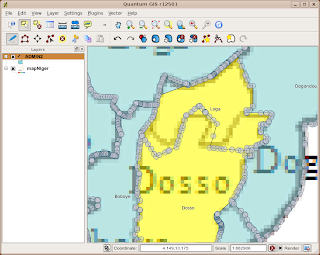

Polygon Shapefile Editing with QGIS

This is a little excursion into editing polygons with some of the new features in Qgis. I'm using a fresh-out-of-SVN of Qgis 1.4. Thanks to whoever fixed the right-click crash bug one hour after I reported it!

I was given a bitmap file representing the new boundaries of regions we are supposed to be working on for a project. I couldn't get this in a spatial format no matter how hard I tried. I did have some similar boundaries but there were a few changes here and there. I loaded the bitmap into the Qgis georeferencer and using my old boundary shapefile matched some points up and created a world file. I could then load the bitmap into Qgis. Here it is:

There's no way I want to redigitize the boundaries when I have something pretty close, and all the overlapping labels on this mean it would be tricky to automatically redigitize the boundaries. Zoom in and see the resolution for yourself.

So I loaded the nearest shapefile and overlaid it, set the fill colour to something transparent (if you set a fill to 'no fill' then you can't see the selection highlight), and activated the magic super labelling from the labelling plugin:

They match up pretty well, but zooming around shows a few problems:

I want to fix the border between Loga and Dosso to better match the underlying raster. First up, activate the editing mode:

Now I hit a problem. Since polygon shapefiles are doubly-digitised, I couldn't see how to move the points on both polygonal boundaries together. And if I moved first one, and then the other, I would probably end up with slivers or polygons that didn't quite fit. I hit on another strategy.

First, select Loga region:

then use the 'Split Feature' tool to cut off a little bit of Loga in the corner away from the edge. This is just to keep the attributes of Loga somewhere, since in a second we're going to merge it with Dosso:

Now we have two features with the same attributes. It's possible that ID numbers have changed here, so don't rely on them. That might explain a bug to appear later... So now, select Dosso and the main part of Loga, and use the Merge Selected Features tool:

This pops up the dialog asking you where the merged feature should get its attributes from. In this case we want the feature to take the attributes of Dosso:

This leaves a few stray bits lying around on the border:

So use the Delete Ring tool to get rid of them:

Now we are going to split Dosso along the new border. First select it:

Then use the Split Feature tool to split by following the border on the underlying raster:

Here's the bug. It's labelled Dosso with 'Loga'. If you check the attribute table at this point it still says 'Dosso', so maybe everything is okay. We'll press on. What we do next is to select the two parts that will make up the new Loga in preparation for another 'Merge Selected Features':

We do the merge, and get the attributes from the little corner of Loga that we cut off earlier:

Now we have the geometry we want:

Except it's still labelled Dosso as Loga. The attribute table seems fine though - if I select Dosso the right line is highlighted in the attribute table:

Never mind, let's turn off the editing and see what happens:

Ooh! It's all looking good! Dosso is Dosso and Loga is Loga.

Now I hope there is an easier way of doing this - a way of editing points along common boundaries such that both polygons are modified. But I can't find it, and I've asked on #qgis and nobody seems to know. If not, then this might be the best way to do it!

I was given a bitmap file representing the new boundaries of regions we are supposed to be working on for a project. I couldn't get this in a spatial format no matter how hard I tried. I did have some similar boundaries but there were a few changes here and there. I loaded the bitmap into the Qgis georeferencer and using my old boundary shapefile matched some points up and created a world file. I could then load the bitmap into Qgis. Here it is:

So I loaded the nearest shapefile and overlaid it, set the fill colour to something transparent (if you set a fill to 'no fill' then you can't see the selection highlight), and activated the magic super labelling from the labelling plugin:

They match up pretty well, but zooming around shows a few problems:

I want to fix the border between Loga and Dosso to better match the underlying raster. First up, activate the editing mode:

Now I hit a problem. Since polygon shapefiles are doubly-digitised, I couldn't see how to move the points on both polygonal boundaries together. And if I moved first one, and then the other, I would probably end up with slivers or polygons that didn't quite fit. I hit on another strategy.

First, select Loga region:

then use the 'Split Feature' tool to cut off a little bit of Loga in the corner away from the edge. This is just to keep the attributes of Loga somewhere, since in a second we're going to merge it with Dosso:

Now we have two features with the same attributes. It's possible that ID numbers have changed here, so don't rely on them. That might explain a bug to appear later... So now, select Dosso and the main part of Loga, and use the Merge Selected Features tool:

This pops up the dialog asking you where the merged feature should get its attributes from. In this case we want the feature to take the attributes of Dosso:

This leaves a few stray bits lying around on the border:

So use the Delete Ring tool to get rid of them:

Now we are going to split Dosso along the new border. First select it:

Then use the Split Feature tool to split by following the border on the underlying raster:

Here's the bug. It's labelled Dosso with 'Loga'. If you check the attribute table at this point it still says 'Dosso', so maybe everything is okay. We'll press on. What we do next is to select the two parts that will make up the new Loga in preparation for another 'Merge Selected Features':

We do the merge, and get the attributes from the little corner of Loga that we cut off earlier:

Now we have the geometry we want:

Except it's still labelled Dosso as Loga. The attribute table seems fine though - if I select Dosso the right line is highlighted in the attribute table:

Never mind, let's turn off the editing and see what happens:

Ooh! It's all looking good! Dosso is Dosso and Loga is Loga.

Now I hope there is an easier way of doing this - a way of editing points along common boundaries such that both polygons are modified. But I can't find it, and I've asked on #qgis and nobody seems to know. If not, then this might be the best way to do it!

Friday, 11 December 2009

Why Screencasts Can Suck

A screencast is an instructional video where you see someone's computer screen as they do something, together with a voice-over of them telling you what they are doing. Google trends shows them starting in 2006 and increasing in search volume ever since. Everyone wants to do a screencast.

But are they being used for the right thing? They have their place, just as a screwdriver is the right tool for putting in screws, but there seems to me to be a lot of people using them in the wrong place.

Here's a few reasons why they suck, together with fixes for ameliorating the suckage:

It's extra work to do this, but then it's extra work to do a screencast properly with transcript, subtitles, or key point markers. Terse and precise text is much better than a rambling voice-over. Screencasts may be great for demonstrating anything that has lots of animation in it, but to demonstrate the operation of a piece of point-and-click software it has it's limitations.

Just sayin'.

But are they being used for the right thing? They have their place, just as a screwdriver is the right tool for putting in screws, but there seems to me to be a lot of people using them in the wrong place.

Here's a few reasons why they suck, together with fixes for ameliorating the suckage:

- Speech and graphics aren't searchable in a browser or indexable in a search engine

- Fix: include a transcript or at least a number of keywords and keyphrases on the page.

- Video is hard to jump to a precise point. If I didn't follow a point, or I want to see how you set something up near the start, I'd like to be able to jump back and forth to key points. This also makes it hard to go through a screencast at your own pace.

- Fix: I think YouTube videos can have chapter points. It may also be possible that screencasting software can give you some links for this. Include them.

- Fix: Alternatively, overlay your screencast with big chunky step numbers or text in the corner. Then as I scroll the fiddly little control back I can see it change from (3) to (2).

- Speech and text can't be cut and pasted. This is a pain with command-line videos, less so with gui clickage.

- Fix: supply a text transcript of commands and speech where appropriate.

- I'm listening to music. The voiceover doesn't go with my tunes.

- Fix: include subtitles. Also helps people with hearing difficulties, which will include me if I carry on listening to Muse at these volumes.

- Loss of resolution. If you are squeezing your entire monitor onto a little YouTube video something is going to get lost. Zoom in if you need to show detail.

- Two Many Misteaks. I don't want to see you mistyping things. Or going "oops". Or stuttering. Or forgetting something. Shout "cut" to yourself and do another take. Or do several takes and edit. Remember you only have to get this right once, thousands of people may have to watch it.

- Nothing happening. Perhaps Andy Warhol would enjoy an unchanging screen with some rambling banter over the top. But I'm not sure everyone else does. This is supposed to be instructable, not minimalist.

It's extra work to do this, but then it's extra work to do a screencast properly with transcript, subtitles, or key point markers. Terse and precise text is much better than a rambling voice-over. Screencasts may be great for demonstrating anything that has lots of animation in it, but to demonstrate the operation of a piece of point-and-click software it has it's limitations.

Just sayin'.

Tuesday, 1 December 2009

Converting an mdb to spatialite

There's loads of data on the European Environment Agency's web site. And I'm looking for data for my Open Source Geospatial course in January. That's next month now. A lot of the data is in shapefile or geoTiff form, which you can read right into Qgis, R, and with a bit of fiddling, OpenLayers. However there are some databases on there in .mdb format. That's Microsoft Access format. Oh great.

Luckily there's a bunch of command-line tools for extracting data from mdb files. Then I can import them into spatialite and add the geographic data to the tables.

Here's what I did. First get the mdb file from the EPER data set downloads. I'm not sure why the March 2008 version is considered later than the August version, but never mind. We unzip and now have EPER_dataset_27-03-2008.mdb to play with. I'm using the bash shell here for my unix commands.

Let's just set a variable to the filename because it's such a fiddle to type:

mdb=EPER_dataset_27-03-2008.mdb

Now fire up spatialite-gui and create a new database called eper.sqlite. It should have some tables in it, namely: geom_cols_ref_sys, geometry_columns, and spatial_ref_sys . Quit spatialite-gui.

The next job is to generate the tables in the spatialite file. You can dump the schema from the mdb file and read it into spatialite easy enough, and I found that the sybase version of the schema was accepted better by spatialite than the default access option, or the oracle version:

mdb-schema $mdb sybase | spatialite eper.sqlite

Ignore the errors, they occur because the schema script tries to drop all tables before creating them.

Next up, read all the table data from the mdb and load into sqlite. I use the -I option of mdb-export to dump SQL INSERT statements, and the -R option to add the semicolons that spatialite needs:

for t in `mdb-tables $mdb` ; do

echo $t

mdb-export -I -R ";\n" $mdb $t |spatialite eper.sqlite

done

All my table names and column names are single words, so I don't need any quotes or the -S option to sanitize names. That's not always the case.

Now we have to spatially enable the Facility table. Fire up the gui again on the eper.sqlite file. You should see the new tables.

The Facility table has Longitude and Latitude columns which look like WGS84 coords, and indeed there is a Geographic Coordinate System column. Let's just check:

SELECT * FROM "Facility" where GeographicCoordinateSystem <> 'WGS84'

Oh dear. 28 of them appear to be in ETRS89. We'll ignore that for now, but it's always good to check.

The table needs a geometry column, so we add it thus:

SELECT AddGeometryColumn('Facility', 'the_geom', 4326, 'POINT', 2);

And now we fill that column by making points from the Lat/Long columns:

UPDATE Facility SET the_geom = MakePoint(Longitude,Latitude,4326)

From that point we can load the database in Qgis 1.4.x using the spatialite layer and plot the points, query them etc etc.

The database is a complex beast if you wish to relate the other emissions data to the facility points. I've constructed what I think is an approximate picture of the relations between the tables, but I may be wrong!

The PDF/DBDesigner XML of this is available from me if you ask nicely! You could probably use this now to do stuff like creating a view in spatialite that mapped all emissions of a certain substance in various countries, or summarise emissions counts in countries and so on. There's a Flash Interface to the EPER data, but it could easily be done in OpenLayers... Anyone?

Anyway, that's how to get some mdb database into spatialite and plot it in Qgis!

Luckily there's a bunch of command-line tools for extracting data from mdb files. Then I can import them into spatialite and add the geographic data to the tables.

Here's what I did. First get the mdb file from the EPER data set downloads. I'm not sure why the March 2008 version is considered later than the August version, but never mind. We unzip and now have EPER_dataset_27-03-2008.mdb to play with. I'm using the bash shell here for my unix commands.

Let's just set a variable to the filename because it's such a fiddle to type:

mdb=EPER_dataset_27-03-2008.mdb

Now fire up spatialite-gui and create a new database called eper.sqlite. It should have some tables in it, namely: geom_cols_ref_sys, geometry_columns, and spatial_ref_sys . Quit spatialite-gui.

The next job is to generate the tables in the spatialite file. You can dump the schema from the mdb file and read it into spatialite easy enough, and I found that the sybase version of the schema was accepted better by spatialite than the default access option, or the oracle version:

mdb-schema $mdb sybase | spatialite eper.sqlite

Ignore the errors, they occur because the schema script tries to drop all tables before creating them.

Next up, read all the table data from the mdb and load into sqlite. I use the -I option of mdb-export to dump SQL INSERT statements, and the -R option to add the semicolons that spatialite needs:

for t in `mdb-tables $mdb` ; do

echo $t

mdb-export -I -R ";\n" $mdb $t |spatialite eper.sqlite

done

All my table names and column names are single words, so I don't need any quotes or the -S option to sanitize names. That's not always the case.

Now we have to spatially enable the Facility table. Fire up the gui again on the eper.sqlite file. You should see the new tables.

The Facility table has Longitude and Latitude columns which look like WGS84 coords, and indeed there is a Geographic Coordinate System column. Let's just check:

SELECT * FROM "Facility" where GeographicCoordinateSystem <> 'WGS84'

Oh dear. 28 of them appear to be in ETRS89. We'll ignore that for now, but it's always good to check.

The table needs a geometry column, so we add it thus:

SELECT AddGeometryColumn('Facility', 'the_geom', 4326, 'POINT', 2);

And now we fill that column by making points from the Lat/Long columns:

UPDATE Facility SET the_geom = MakePoint(Longitude,Latitude,4326)

From that point we can load the database in Qgis 1.4.x using the spatialite layer and plot the points, query them etc etc.

The database is a complex beast if you wish to relate the other emissions data to the facility points. I've constructed what I think is an approximate picture of the relations between the tables, but I may be wrong!

Anyway, that's how to get some mdb database into spatialite and plot it in Qgis!

Monday, 16 November 2009

Choropleth mapping challenge in Qgis

Making choropleth maps - how hard can it be? It's just a bunch of data colouring another bunch of polygons, right?

This all started over at Flowing Data where Nathan used Python to hack an existing SVG file to colour the counties of the US according to data read in from a file of county unemployment levels. Dave Smith of Revolution Computing issued a challenge: do this in R. I'm always up for a challenge so I found a shapefile of the US counties with the FIPS county codes, and then using the rgdal and sp packages had a plot up in about twenty minutes. The next day Dave posted the challenge results.

Today on the R-Sig-Geo mailing list there has been a bit of traffic on using R or GIS for cartography. Various people arguing one way or the other about how what constitutes 'publication quality' and various opinions bouncing around. So I thought I'd revisit the challenge - this time using open-source GIS software.

Problem one is getting the unemployment data and map data - the unemployment data is linked as a CSV file from the Flowing Data blog entry, and shapefiles of US counties are downloadable after a quick google search.

There are various variations of the US counties depending on the time they were defined, whether Alaska and Hawaii are in their proper place or tucked neatly around the 'lower 48' to make mapping easier, and whether the data has the right FIPS codes. I found a shapefile with county data from 2000, full FIPS codes, and with AK and HI slotted in.

The next step is to create a geographic data set with the unemployment data as attributes. But first I had to fiddle with the CSV file. It had the FIPS codes in two parts - one for the state and one for the county. The full FIPS code is five digits, made up from the two parts with leading zeroes. I didn't want to use R or Python for this, so I loaded the CSV into Calc, the spreadsheet component of OpenOffice.

The first thing I did was to shift all the data down one row and add some headers. The only important ones are the two parts of the FIPS codes and the unemployment percentage.

To create the full FIPS code I have to concatenate the two parts (in columns B and C) with the leading zeroes - I put this formula into J2 and copy it down the column:

=CONCATENATE(TEXT(B2;"00");TEXT(C2;"000"))

then I add the word 'FIPS' as the header in J1 for this column.

Now I have a spreadsheet with a FIPS and an UNEMPLOYMENT column, and a shapefile with a FIPS attribute. How do I join them?

A couple of ways spring to mind:

In the Tools menu of Qgis I found the Data Management options and the Join Attributes function. Exactly what I needed! I set my Join Data to be the DBF of the unemployment data and FIPS to be the field to join with. Hit OK, and in under a minute I had a shapefile with the DBF data in place.

The new layer was loaded. Now all that was left was a bit of clicking in the Layer.. Properties.. Symbology dialog to colour the map in six classes with the same intervals as in the challenge, and using the same colours from the Color Brewer system. I also overlaid a state map with transparent fill and a thick solid white outline to get the same effect as the challenge map.

Here's how it looks in Qgis:

Note the large chunk of Alaska missing - that's a non-matching FIPS code. Oh well, nothing's perfect!

Qgis also has a map composer where you can do basic page layout for cartography. Here's one rendering of the unemployment map:

Here you can add layers, legends, and text, and also play with fonts and spacing and sizes. The maps can be output to SVG and PDF.

I'd call these publication-quality - at least they're a better quality than many maps I've seen published (wacky handwriting font excepted).

This has taken me about 45 minutes while waiting for the rain to stop so I can go home. Any gvSIG orArcGIS people out there want to have a go?

This all started over at Flowing Data where Nathan used Python to hack an existing SVG file to colour the counties of the US according to data read in from a file of county unemployment levels. Dave Smith of Revolution Computing issued a challenge: do this in R. I'm always up for a challenge so I found a shapefile of the US counties with the FIPS county codes, and then using the rgdal and sp packages had a plot up in about twenty minutes. The next day Dave posted the challenge results.

Today on the R-Sig-Geo mailing list there has been a bit of traffic on using R or GIS for cartography. Various people arguing one way or the other about how what constitutes 'publication quality' and various opinions bouncing around. So I thought I'd revisit the challenge - this time using open-source GIS software.

Problem one is getting the unemployment data and map data - the unemployment data is linked as a CSV file from the Flowing Data blog entry, and shapefiles of US counties are downloadable after a quick google search.

There are various variations of the US counties depending on the time they were defined, whether Alaska and Hawaii are in their proper place or tucked neatly around the 'lower 48' to make mapping easier, and whether the data has the right FIPS codes. I found a shapefile with county data from 2000, full FIPS codes, and with AK and HI slotted in.

The next step is to create a geographic data set with the unemployment data as attributes. But first I had to fiddle with the CSV file. It had the FIPS codes in two parts - one for the state and one for the county. The full FIPS code is five digits, made up from the two parts with leading zeroes. I didn't want to use R or Python for this, so I loaded the CSV into Calc, the spreadsheet component of OpenOffice.

The first thing I did was to shift all the data down one row and add some headers. The only important ones are the two parts of the FIPS codes and the unemployment percentage.

To create the full FIPS code I have to concatenate the two parts (in columns B and C) with the leading zeroes - I put this formula into J2 and copy it down the column:

=CONCATENATE(TEXT(B2;"00");TEXT(C2;"000"))

then I add the word 'FIPS' as the header in J1 for this column.

Now I have a spreadsheet with a FIPS and an UNEMPLOYMENT column, and a shapefile with a FIPS attribute. How do I join them?

A couple of ways spring to mind:

- Load everything into PostGIS and do a join or create a view

- Create a shapefile with the Unemployment data in the DBF

In the Tools menu of Qgis I found the Data Management options and the Join Attributes function. Exactly what I needed! I set my Join Data to be the DBF of the unemployment data and FIPS to be the field to join with. Hit OK, and in under a minute I had a shapefile with the DBF data in place.

The new layer was loaded. Now all that was left was a bit of clicking in the Layer.. Properties.. Symbology dialog to colour the map in six classes with the same intervals as in the challenge, and using the same colours from the Color Brewer system. I also overlaid a state map with transparent fill and a thick solid white outline to get the same effect as the challenge map.

Here's how it looks in Qgis:

Note the large chunk of Alaska missing - that's a non-matching FIPS code. Oh well, nothing's perfect!

Qgis also has a map composer where you can do basic page layout for cartography. Here's one rendering of the unemployment map:

Here you can add layers, legends, and text, and also play with fonts and spacing and sizes. The maps can be output to SVG and PDF.

I'd call these publication-quality - at least they're a better quality than many maps I've seen published (wacky handwriting font excepted).

This has taken me about 45 minutes while waiting for the rain to stop so I can go home. Any gvSIG orArcGIS people out there want to have a go?

Thursday, 5 November 2009

Workshop Report

I've just got back from London after a trip for a one-day workshop on mapping software in health research organised by The Infectious Disease Research Network. It was held at the Royal Geographical Society buildings - a wonderful place filled with history from RGS expeditions of the past.

The day was arranged by Mike Head of the IDRN and the meeting chaired by Prof. Graham Moon of Southampton University. The talks were varied enough to sustain interest for over a hundred people stuck in one room for the day. The applied talks before lunch combined the usual stories of fieldwork adventures with the gritty details of database servers and web mapping platforms.

During lunch I set up my e-presentation. It was a series of animated slides talking about the use of open-source geospatial software. I also took the opportunity to distribute some flyers for my course in January and the OSGeo group. I talked through my case-studies several times to interested people and was losing my voice by the time I was finishing off my little sticky chocolate cake.

After lunch two talks grabbed the audience - Tim Fendley's amusing tales of new map systems for pedestrians around London was illustrated by screenfuls of comic signage, many of which I encountered on my London wanderings the day after. Chris Phillips from MapAction talked about the work of getting maps and GIS technology out to disaster areas to help co-ordinate search, rescue, and aid deployment.

The final talk was from Mikaela Keller on the Healthmap.org project. This is a system that takes news feeds and health data and maps it in real time. She talked about how a combination of computer language processing and human scanning produce reliable maps. I'm still not sure how you could use this data to do rigorous statistical analysis but I don't think that's the point of it.

After a summing-up from Graham we adjourned for wine and juice outside the hall, and then as numbered dwindled we remaining few jumped into a couple of taxis and headed for the John Snow pub in Soho. Dr John Snow was perhaps the first person to combine health and mapping when he plotted cases of cholera in 1854 and concluded the source was a water pump near where the pub that bears his name now stands. We raised our glasses to the good doctor after a day of discussion of the sort of work made possible by standing on his shoulders.

The day was arranged by Mike Head of the IDRN and the meeting chaired by Prof. Graham Moon of Southampton University. The talks were varied enough to sustain interest for over a hundred people stuck in one room for the day. The applied talks before lunch combined the usual stories of fieldwork adventures with the gritty details of database servers and web mapping platforms.

During lunch I set up my e-presentation. It was a series of animated slides talking about the use of open-source geospatial software. I also took the opportunity to distribute some flyers for my course in January and the OSGeo group. I talked through my case-studies several times to interested people and was losing my voice by the time I was finishing off my little sticky chocolate cake.

After lunch two talks grabbed the audience - Tim Fendley's amusing tales of new map systems for pedestrians around London was illustrated by screenfuls of comic signage, many of which I encountered on my London wanderings the day after. Chris Phillips from MapAction talked about the work of getting maps and GIS technology out to disaster areas to help co-ordinate search, rescue, and aid deployment.

The final talk was from Mikaela Keller on the Healthmap.org project. This is a system that takes news feeds and health data and maps it in real time. She talked about how a combination of computer language processing and human scanning produce reliable maps. I'm still not sure how you could use this data to do rigorous statistical analysis but I don't think that's the point of it.

After a summing-up from Graham we adjourned for wine and juice outside the hall, and then as numbered dwindled we remaining few jumped into a couple of taxis and headed for the John Snow pub in Soho. Dr John Snow was perhaps the first person to combine health and mapping when he plotted cases of cholera in 1854 and concluded the source was a water pump near where the pub that bears his name now stands. We raised our glasses to the good doctor after a day of discussion of the sort of work made possible by standing on his shoulders.

Wednesday, 7 October 2009

Pushing OpenSource in a grant bid

Today I've been writing a section on a research grant (as well as fixing the prof's typos and spellong misteaks). It's great to be able to put the following into a proposal:

The computing infrastructure will include a database server (running PostGIS), a front-end (running Apache and serving maps via OpenLayers and maybe WFS/WMS services), and two compute engines churning out statistics with R. It'll be fun to put all these things together if we get the grant. I'm sure I'll blog the result when we get it.

"Software and accompanying documentation and training tools developed and released during the programme will be made available under an Open Source license. This will maximise its accessibility to users, especially those working in health institutions in developing countries. It also enables the project to accept improvements and fixes in the software from the wider community.

The project will also commit to using Free and Open Source software as widely as possible within the research team, so that software solutions are developed that do not have proprietary components as part of their architecture".

The computing infrastructure will include a database server (running PostGIS), a front-end (running Apache and serving maps via OpenLayers and maybe WFS/WMS services), and two compute engines churning out statistics with R. It'll be fun to put all these things together if we get the grant. I'm sure I'll blog the result when we get it.

Saturday, 3 October 2009

Tools I Use

This is just a list of software I use for work. Is there some way I can make these keywords on the blog?

- Ubuntu Linux - the free operating system

- R - statistics software

- Python - flexible programming language

- Quantum GIS - desktop GIS with Python extensibility

- PostGIS - spatial database

- OpenLayers - Javascript client-side web maps

GeoIntroduction

Morning all. This is my new blog site for all things generally work-related.

I work in the School of Health and Medicine at Lancaster University within the 'CHICAS' group - Combining Health Information, Computation, and Statistics'. Basically a group of numerates doing data analysis of anything health or biomedicine related.

Most of my activity centres round geographic data, so here I'll be posting useful snippets, questions, comments, rants and opinions, which will not necessarily be those of my employer. I might also wander off into general computing and IT areas.

I work in the School of Health and Medicine at Lancaster University within the 'CHICAS' group - Combining Health Information, Computation, and Statistics'. Basically a group of numerates doing data analysis of anything health or biomedicine related.

Most of my activity centres round geographic data, so here I'll be posting useful snippets, questions, comments, rants and opinions, which will not necessarily be those of my employer. I might also wander off into general computing and IT areas.

Subscribe to:

Comments (Atom)